SLAM

Imagine it's 1733 and you step off the coach somewhere in Siberia. The reason for your stay is to create a map of a countryside that no one has ever mapped. Robots face a very similar task when they wake up without any information about their surroundings but are required to automatically generate a map for later navigation. The actual question to be solved is: how can you localize yourself without a map, and how can you create a map without knowing your location? The goal, therefore, is to perform Simultaneous Localization and Mapping (SLAM). The core idea of SLAM is to imitate the way humans sense space, for example while walking around a building and observing it.

INTRODUCTION

Measuring the Unknown

In the early 1990s, NASA proposed an approach using cameras on a Mars rover and a novel image analysis algorithm that enabled SLAM from digital photographs. Since then, hundreds of research studies have been published on this topic. Initial applications were in the inspection of historical sites, contaminated areas and remote sensing, where, for example, a 3D model of a city is of interest. With the rise of Augmented Reality, SLAM gained massive momentum because it allows virtual scenes to be rendered according to the camera position and orientation, computed in near real time. Moreover, all kinds of autonomous vehicles rely on SLAM to stay on track in GPS-denied environments such as tunnels or dense urban areas. More recently, service robots have been using SLAM algorithms that enable automated transport of goods, guidance of people and intelligent obstacle avoidance.

We at GESTALT provide SLAM solutions for off-the-shelf sensors, which are common in cost-optimized robots. Enhancing state-of-the-art SLAM with our proprietary algorithms leads to higher accuracy and a broader range of applications. Additionally, integrating semantic perception algorithms, as introduced in Object Detection, enables the robots to understand the environment that is being mapped. Let’s find out together whether our approach to SLAM can help you and bring value to your company.

Construction of a point cloud using depth data provided by the sensor and visual odometry to track the position of the camera, in this case a low-cost unit (RealSense ZR300).

Observing and tracking the same object points across multiple camera images makes it possible to compute the position and orientation of each camera.

TECHNOLOGICAL OUTLINE

Technology behind Visual SLAM

The main idea behind visual SLAM is to analyze a sequence of images in order to recover the underlying camera movement. This builds on fundamental computer vision research of recent decades. Most SLAM approaches rely on detecting and tracking distinctive image patterns such as corners.
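To make "corners" concrete, here is a minimal NumPy sketch of the classic Harris corner detector. It is an illustrative assumption, not the detector of any particular SLAM system; the window radius `r=2` and sensitivity `k=0.05` are common textbook choices picked for this example. Each pixel is scored with det(M) − k·trace(M)² of the local gradient structure tensor M, which is large only where the image changes strongly in two directions at once.

```python
import numpy as np

def box_sum(img, r):
    """Sum of values in a (2r+1) x (2r+1) window around each pixel (zero padding)."""
    padded = np.pad(img, r)
    out = np.zeros_like(img)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def harris_response(img, k=0.05, r=2):
    """Harris score det(M) - k * trace(M)^2 of the windowed structure tensor M."""
    Iy, Ix = np.gradient(img.astype(float))  # gradients along rows (y) and columns (x)
    Sxx = box_sum(Ix * Ix, r)
    Syy = box_sum(Iy * Iy, r)
    Sxy = box_sum(Ix * Iy, r)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2

# Synthetic test image: a bright square on a dark background.
img = np.zeros((40, 40))
img[10:30, 10:30] = 1.0
score = harris_response(img)
peak = np.unravel_index(np.argmax(score), score.shape)
print(peak)  # lies next to one of the square's corners
```

On this image, flat regions score zero and straight edges score negative (only one gradient direction), so the strongest responses sit at the square's four corners. These are exactly the kind of points a tracker can re-find reliably in the next frame.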

Two photographs of a scene taken from two different positions make it possible to compute the relative change in orientation and the direction of translation between them. When all frames of a video are processed this way, chaining together (composing) the small relative rotations and translations, which together form the pose, yields the camera pose in the very last frame. Note that when only standard camera images are used, it is not possible to recover the length of the translation vector between two images: a scene twice as large, observed from camera positions twice as far apart, produces exactly the same images. For the same reason, special-effects teams on old movies such as Godzilla built miniature models of buildings and trees to create the illusion of giant monsters.
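The two-view step can be sketched with the linear eight-point algorithm, one standard way (assumed here, not necessarily what any given SLAM system uses) to estimate the essential matrix E = [t]ₓR from matched points in normalized image coordinates. The synthetic scene, rotation and translation below are made-up test values. The sketch recovers the translation only as a unit vector and shows the scale ambiguity directly: a ten times larger scene seen from a ten times longer baseline projects to identical image points.

```python
import numpy as np

def rot_z(angle):
    """Rotation matrix about the optical (z) axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def project(points, R, t):
    """Pinhole projection to normalized image coordinates (focal length 1)."""
    cam = points @ R.T + t            # world -> camera frame: X_c = R X + t
    return cam[:, :2] / cam[:, 2:3]

def eight_point(x1, x2):
    """Linear eight-point estimate of E such that [x2, 1] E [x1, 1]^T = 0."""
    ones = np.ones((len(x1), 1))
    p1, p2 = np.hstack([x1, ones]), np.hstack([x2, ones])
    # Each correspondence yields one linear equation in the 9 entries of E.
    A = np.einsum("ni,nj->nij", p2, p1).reshape(len(x1), 9)
    E = np.linalg.svd(A)[2][-1].reshape(3, 3)
    # Enforce the essential-matrix structure: singular values (1, 1, 0).
    U, _, Vt = np.linalg.svd(E)
    return U @ np.diag([1.0, 1.0, 0.0]) @ Vt

def translation_direction(E):
    """E = [t]x R, so t spans the left null space of E; only its direction is recoverable."""
    U, _, _ = np.linalg.svd(E)
    return U[:, 2]                    # unit vector, sign ambiguous

rng = np.random.default_rng(0)
X = rng.uniform([-2.0, -2.0, 4.0], [2.0, 2.0, 8.0], size=(20, 3))  # synthetic 3D points
R_true, t_true = rot_z(0.1), np.array([0.5, 0.1, 0.0])             # made-up camera motion
x1 = project(X, np.eye(3), np.zeros(3))   # first camera at the origin
x2 = project(X, R_true, t_true)           # second camera after the motion

t_dir = translation_direction(eight_point(x1, x2))
print(abs(t_dir @ t_true) / np.linalg.norm(t_true))  # close to 1: direction recovered

# Scale ambiguity: 10x larger scene, 10x longer baseline, identical image points.
print(np.allclose(project(10 * X, R_true, 10 * t_true), x2))  # True
```

A practical pipeline would add outlier rejection (for example RANSAC) and decompose E into R and t. Chaining those relative poses over a video gives the trajectory, but only up to an unknown overall scale.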

So how do we deal with scale ambiguity?

Some SLAM systems use a so-called inertial measurement unit (IMU), which combines an accelerometer and a gyroscope. The accelerometer measures linear acceleration and the gyroscope measures angular velocity. This data is particularly valuable for SLAM because it is metric, so it helps to estimate the length of the translation between two images and not just the direction of movement. Unfortunately, fusing the high-rate IMU measurements and the images into a consistent motion estimate is hard. The mathematical models require a high level of expertise and, what is much worse, cumbersome calibration procedures combined with expensive IMUs for higher accuracy.
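As a strongly simplified illustration of how metric IMU data can fix the scale of a monocular trajectory, the sketch below fits a single scale factor s by least squares, s = (v·d)/(v·v), between up-to-scale visual displacements v and metric displacements d. It assumes the IMU displacements have already been integrated with gravity removed and initial velocity known. Real visual-inertial systems instead estimate scale jointly with velocity, gravity direction and sensor biases. All numbers are hypothetical.

```python
import numpy as np

def estimate_scale(visual_disp, imu_disp):
    """Least-squares scale s minimizing sum_i ||s * v_i - d_i||^2, i.e. s = (v . d) / (v . v)."""
    v = np.asarray(visual_disp, dtype=float).ravel()
    d = np.asarray(imu_disp, dtype=float).ravel()
    return float(v @ d / (v @ v))

# Hypothetical camera displacements from monocular visual odometry (arbitrary units)...
visual = np.array([[0.2, 0.0, 0.1],
                   [0.3, 0.1, 0.0],
                   [0.1, 0.2, 0.1]])
# ...and the same displacements in metres, assumed already integrated from IMU data
# (gravity removed, initial velocity known), with a little measurement noise.
rng = np.random.default_rng(1)
imu = 2.5 * visual + rng.normal(0.0, 0.005, visual.shape)

s = estimate_scale(visual, imu)
print(s)                     # close to 2.5 (metres per map unit)
metric_visual = s * visual   # visual odometry now expressed in metres
```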

The figure below shows the position error of a mid-class IMU when the accelerometer data is integrated twice and the gyroscope data once, without any other correction. Given that most applications require an error below one centimeter, the result becomes unacceptable after only a few seconds. The error is drastically reduced by fusing image-based SLAM with the IMU data. However, this requires a very good understanding of the underlying hardware, which is usually not available for low-cost devices.
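Why does pure integration fail so quickly? A constant accelerometer bias b becomes a linearly growing velocity error after the first integration and a position error of ½·b·t² after the second. The sketch below integrates a hypothetical bias of 0.01 m/s² (about 1 mg) at an assumed 1 kHz rate for a sensor that is actually standing still. These values are illustrative, not the specification of the IMU in the figure.

```python
import numpy as np

def position_error_from_bias(bias, duration, dt=0.001):
    """Position error of a stationary sensor whose accelerometer has a constant bias (m/s^2)."""
    steps = int(round(duration / dt))
    vel_err = np.cumsum(np.full(steps, bias * dt))           # 1st integration: grows linearly
    return np.concatenate([[0.0], np.cumsum(vel_err * dt)])  # 2nd integration: grows quadratically

bias = 0.01   # hypothetical bias of about 1 mg (0.01 m/s^2)
dt = 0.001    # assumed 1 kHz IMU rate
err = position_error_from_bias(bias, duration=5.0, dt=dt)

t_1cm = np.argmax(err > 0.01) * dt
print(t_1cm)     # about 1.41 s, close to sqrt(2 * 0.01 / bias)
print(err[-1])   # about 0.125 m after 5 s, i.e. 0.5 * bias * t^2
```

Even this small bias exceeds the one-centimeter budget in well under two seconds. A gyroscope bias makes things worse, because a small tilt error leaks part of gravity into the measured horizontal acceleration.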

The scale ambiguity can also be resolved by detecting objects of known size in the images. It is also possible to rely solely on markers and to compute the camera position and orientation in each image independently; this, however, requires a marker to be visible in every image.
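A minimal sketch of the known-size idea: if the up-to-scale map contains an object whose real size is known, the ratio of real size to reconstructed size is the metric scale factor for the whole map and trajectory. The bottle endpoints, the 25 cm height and the trajectory below are hypothetical values. A real system would fuse many noisy detections over time instead of trusting a single one.

```python
import numpy as np

def scale_from_known_object(p_top, p_bottom, true_height):
    """Metric scale factor = known real size / size measured in the up-to-scale map."""
    measured = np.linalg.norm(np.asarray(p_top, float) - np.asarray(p_bottom, float))
    return true_height / measured

# Hypothetical monocular reconstruction (arbitrary units): bottle endpoints and camera path.
bottle_top = [1.0, 0.5, 4.0]
bottle_bottom = [1.0, 0.6, 4.0]   # 0.1 map units apart
trajectory = np.array([[0.0, 0.0, 0.0],
                       [0.4, 0.0, 0.1],
                       [0.8, 0.0, 0.3]])

s = scale_from_known_object(bottle_top, bottle_bottom, true_height=0.25)  # assumed 25 cm bottle
metric_trajectory = s * trajectory
print(s)                      # about 2.5 metres per map unit
print(metric_trajectory[-1])  # last camera position, now in metres
```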

A less common approach is to run a standard SLAM pipeline and integrate the sparse marker observations for global position correction. This means that if the camera observes a marker at time T, all pose estimates, both before and after that time, are affected by this measurement. We at GESTALT apply this approach to increase the localization accuracy of our robots in indoor and outdoor areas where it is possible to place a few markers. Finally, an intuitive but difficult approach is to detect everyday objects of known size, such as bottles, and use them to improve SLAM systems that have only one camera. Applying this concept further extends the range of applications for low-cost cameras in visual navigation. This approach, and SLAM in general, have a huge impact on many robotic applications, as illustrated in the next section.
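The effect of a single marker sighting on the whole trajectory can be shown with a toy pose graph. The sketch below is a hypothetical 1D example, not GESTALT's implementation. A robot moves along a line, odometry overestimates each 1.0 m step by 5%, and one marker observed at pose 5 gives an absolute position. Solving all constraints together with weighted linear least squares corrects the earlier poses and shifts the later ones. Real systems apply the same principle to full 6-DoF poses with nonlinear optimization.

```python
import numpy as np

def solve_pose_chain(odometry, marker_index=None, marker_pos=None, marker_weight=100.0):
    """Weighted linear least squares over 1D positions x_0..x_n of a robot moving along a line."""
    n = len(odometry) + 1
    rows, rhs = [], []

    def add(coeffs, value, weight):
        row = np.zeros(n)
        for idx, c in coeffs:
            row[idx] = c
        w = np.sqrt(weight)             # scale rows so squared residuals are weighted
        rows.append(w * row)
        rhs.append(w * value)

    add([(0, 1.0)], 0.0, 1e6)           # anchor the first pose at the origin
    for i, u in enumerate(odometry):    # relative constraints x_{i+1} - x_i = u_i
        add([(i + 1, 1.0), (i, -1.0)], u, 1.0)
    if marker_index is not None:        # absolute constraint from a marker sighting
        add([(marker_index, 1.0)], marker_pos, marker_weight)
    return np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)[0]

odometry = [1.05] * 10      # true step is 1.0 m; odometry overestimates by 5%
dead_reckoning = solve_pose_chain(odometry)
corrected = solve_pose_chain(odometry, marker_index=5, marker_pos=5.0)
print(np.round(dead_reckoning, 2))
print(np.round(corrected, 2))
```

With the marker, poses 1 to 4 are pulled back toward their true positions, and poses 6 to 10 inherit the corrected position of pose 5, even though the marker was only seen once.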

Detecting the same object points in successive images makes it possible to reconstruct the camera movement between the two images.

Processing a video makes it possible to reconstruct the full camera path and the underlying 3D environment.

Error of the trajectory estimation over time (sec) when only IMU data is used.

Detecting objects and integrating their known size helps single-camera SLAM infer the scale of the environment correctly.

APPLICATIONS & USE CASES

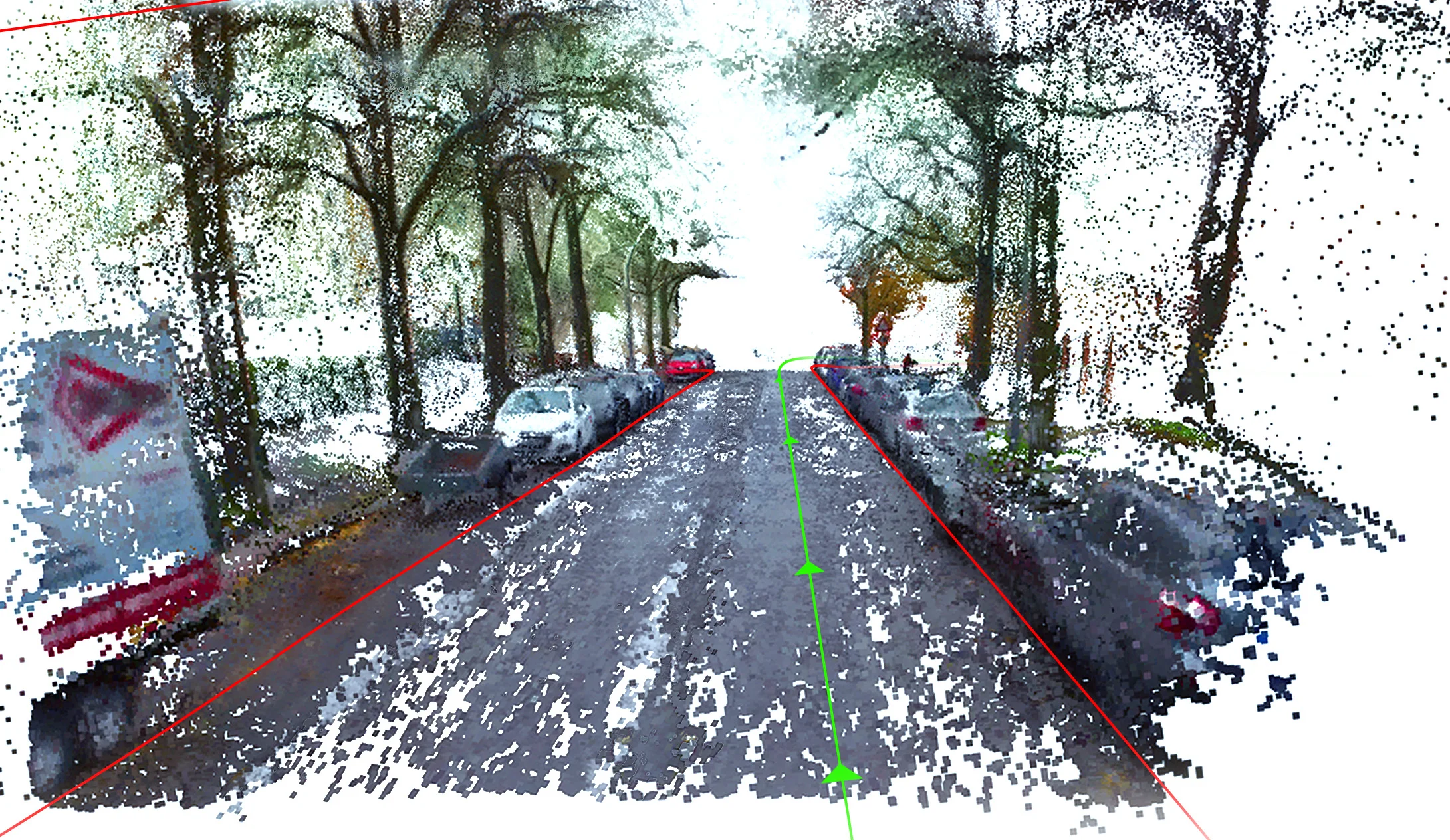

Autonomous Driving

Autonomous vehicles must localize themselves precisely in order to plan their driving route. An error of one meter might put the car in the wrong lane, or worse. Current prototypes of autonomous vehicles rely on SLAM from cameras as well as laser scanners. Beyond academic and industrial research, SLAM is already used by large mapping companies. These companies typically combine laser data with visual SLAM to build dense, high-resolution maps. Interestingly, the success of Deep Learning approaches for object recognition in images makes it possible to relax the localization accuracy requirements, since it is often sufficient to detect street boundaries or parking slots relative to the car rather than on the global world map.

Combining SLAM and dense 3D Reconstruction enables the generation of high definition 3D maps from moving cameras mounted on a vehicle.

Automated guided vehicles (AGVs)

In logistics, automated guided vehicles support the transport of goods, packages and containers. Standard solutions rely on magnetic elements in the ground or colored lane markers. Flexible vision-based SLAM systems would, however, reduce setup costs and allow the robots to be reprogrammed without adapting the physical environment.

Service Robotics

SLAM research is also a promising path toward more intelligent navigation for service robots such as robotic vacuum cleaners. Early versions bumped into obstacles and navigated more or less randomly rather than following a plan. With SLAM, these robots can optimize their cleaning routes and avoid obstacles more intelligently.

Service and social robots have recently appeared on the market, sparking many discussions about the future of robots in households, hospitals, stores and beyond. Currently, however, most available robots use wheel rotation sensors to estimate their location, which is very inaccurate because wheel slip and small errors in wheel geometry accumulate over time. GESTALT evaluates the quality of its SLAM system on such robots, since this is the most challenging area of operation due to their low-cost sensors and actuators.
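To see why wheel-based localization drifts, here is a minimal sketch of standard differential-drive odometry. The encoder resolution, wheel base and wheel radius are hypothetical values. The robot drives straight, but the estimator assumes a wheel radius that is 1% too large, a plausible effect of tire wear or pressure. The position error then grows in proportion to the distance travelled, and nothing in the wheel data can ever correct it.

```python
import math

def integrate_odometry(ticks_left, ticks_right, wheel_radius, wheel_base, ticks_per_rev):
    """Dead-reckon a differential-drive pose (x, y, heading) from per-step encoder ticks."""
    x = y = theta = 0.0
    for tl, tr in zip(ticks_left, ticks_right):
        dl = 2 * math.pi * wheel_radius * tl / ticks_per_rev   # left wheel travel
        dr = 2 * math.pi * wheel_radius * tr / ticks_per_rev   # right wheel travel
        ds = (dl + dr) / 2                                      # travel of the axle centre
        dtheta = (dr - dl) / wheel_base                         # change of heading
        x += ds * math.cos(theta + dtheta / 2)                  # midpoint integration
        y += ds * math.sin(theta + dtheta / 2)
        theta += dtheta
    return x, y, theta

# Hypothetical robot: 1000-tick encoders, 0.30 m wheel base, driving straight.
ticks = [318] * 100
true_pose = integrate_odometry(ticks, ticks, 0.050, 0.30, 1000)   # actual wheel radius
est_pose = integrate_odometry(ticks, ticks, 0.0505, 0.30, 1000)   # radius assumed 1% too large
print(true_pose[0], est_pose[0])  # estimate overshoots by about 1% (roughly 10 cm over 10 m)
```

Heading errors, for example from slightly mismatched left and right wheels, are even more harmful, because every later step is then integrated in the wrong direction.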

Simultaneous Localization and Mapping: robust natural navigation via markerless SLAM; real-time 2D mapping via LiDAR; live automatic map correction; connectivity via Wi-Fi, LTE and 5G; ROS compatible.

In this video, we can see the depth and RGB images captured by a SICK Visionary T and a low-cost RGB camera, respectively. The two cameras are mounted side by side on an AGV and roughly aligned. The depth images are used to create a 3D point cloud, which is colorized according to its Y coordinate.
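Turning a depth image into a point cloud amounts to inverting the pinhole projection for every pixel: X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy. The sketch below assumes z-depth in meters and uses made-up intrinsics (fx, fy, cx, cy). The actual output format and calibration of the sensor in the video are not given here; a time-of-flight camera that reports radial distance would first need that converted to z-depth. The second function colors each point by its Y coordinate, as in the video.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a z-depth image (metres) into an N x 3 point cloud in the camera frame."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]            # pixel row (v) and column (u) indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]      # drop pixels without a valid depth reading

def colorize_by_y(points):
    """Blue-to-red RGB colours in [0, 1] according to each point's Y coordinate."""
    y = points[:, 1]
    span = max(y.max() - y.min(), 1e-9)  # avoid division by zero for a flat cloud
    t = (y - y.min()) / span
    return np.stack([t, np.zeros_like(t), 1.0 - t], axis=1)

# Hypothetical 4 x 6 depth image of a flat wall 2 m away, with one invalid pixel.
depth = np.full((4, 6), 2.0)
depth[0, 0] = 0.0
points = depth_to_points(depth, fx=100.0, fy=100.0, cx=3.0, cy=2.0)  # made-up intrinsics
colors = colorize_by_y(points)
print(points.shape, colors.shape)  # (23, 3) (23, 3)
```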

CONCLUSION

SLAM is a cornerstone of the mobile robotic systems developed at GESTALT Robotics. It enables the robots to measure and control their own movement while they move. We integrate state-of-the-art techniques and extend them for low-cost sensors, which results in high-accuracy localization. The next logical step is controlling the robot's movement: navigating around obstacles and planning further movement strategies based on object recognition from images. This is introduced in Path Planning. Get in touch with us to discuss your use case and how it can benefit from SLAM. You can contact us at info@gestalt-robotics.com or give us a call at +49 30 616 515 60; we would love to hear from you.