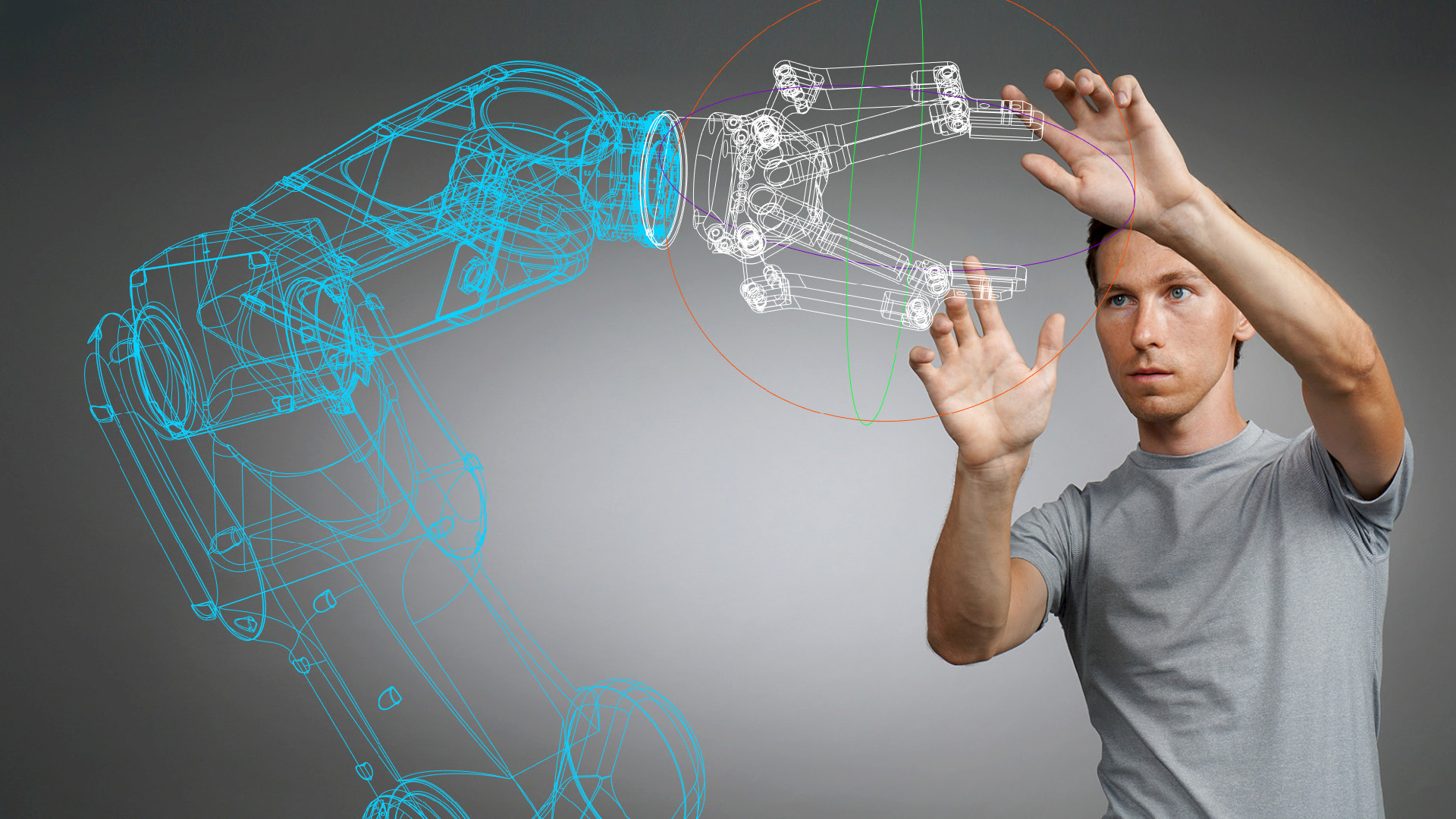

Gesture-based Interaction

In addition to facial expressions and haptics, gestures are an intuitive form of nonverbal human communication and can be used in combination with, or independently of, other communication modalities. Gestures are characterized as intentional movements, usually of the arms and hands, with a manipulative or communicative character.

INTRODUCTION

Role Model: Human Communication

Manipulative gestures involve direct interaction with real or virtual objects through movements of the user. In contrast, communicative gestures are more abstract. Command gestures are communicative gestures that are used in control systems to trigger individual functions. In the simplest case, a command gesture for a human-machine interaction system consists of a single pose, e.g. pointing in a certain direction. Complex command gestures should have a contextual connection between the hand trajectory (symbol) and the control task, for example tracing a circle to trigger a rotation. Gestures with such a contextual relation are more intuitive and easier to learn, and are therefore preferred in the design of a gesture-based control system.

We provide tailored gesture control interfaces for robotic applications. We also develop multimodal interfaces for trade fair exhibits.

We at Gestalt are experts and pioneers in designing gesture-based user interfaces for robotics and have authored numerous publications on gestural interaction.

TECHNOLOGICAL OUTLINE

Gesture Recognition Systems

The main component of a gesture recognition system is a sensor for detecting the gestures. Detection can be achieved by measuring electrical, optical, acoustic, magnetic or mechanical stimuli. Depending on the measuring principle and method, sensors or additional aids may need to be attached directly to the user's body or clothes. Visual measurement principles often rely on additional artificial features, such as markers, to improve robustness. Further processing steps typically involve algorithms for segmentation, tracking, and feature extraction. Subsequently, pattern recognition is used to detect a known gesture. Common methods include template matching with dynamic time warping (DTW) and stochastic models such as hidden Markov models (HMMs). Finally, the recognized gestures are translated into control commands for the robotic system. In most use cases, it makes sense to give the user explicit feedback when a gesture has been successfully recognized.
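The last stages of this pipeline (pattern recognition, command translation, and feedback) can be illustrated with a minimal sketch based on dynamic time warping. It assumes that segmentation and tracking have already produced a 2D hand trajectory. The gesture templates, the command names, and the distance threshold are hypothetical values chosen for illustration and are not taken from any particular system.

```python
import math

def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences of 2D points."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # repeat b[j-1]
                                 cost[i][j - 1],      # repeat a[i-1]
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]

# Hypothetical gesture templates (normalized hand trajectories).
TEMPLATES = {
    "swipe_right": [(x / 4, 0.0) for x in range(5)],
    "swipe_up": [(0.0, y / 4) for y in range(5)],
}

# Hypothetical mapping from gesture names to robot control commands.
COMMANDS = {"swipe_right": "NEXT_TOOL", "swipe_up": "RAISE_ARM"}

def recognize(trajectory, max_distance=1.0):
    """Return the best-matching template name, or None if nothing is close."""
    name, dist = min(
        ((n, dtw_distance(trajectory, t)) for n, t in TEMPLATES.items()),
        key=lambda pair: pair[1],
    )
    return name if dist <= max_distance else None

def to_command(trajectory):
    """Translate a trajectory into (command, feedback message)."""
    gesture = recognize(trajectory)
    if gesture is None:
        return None, "gesture not recognized"
    return COMMANDS[gesture], f"recognized {gesture}"

# A slower, slightly noisy swipe to the right with more samples than the template.
observed = [(0.0, 0.0), (0.1, 0.02), (0.3, 0.0), (0.5, 0.01),
            (0.6, 0.0), (0.8, 0.0), (1.0, 0.0)]
print(to_command(observed))
```

Because DTW aligns sequences of different lengths, the same gesture performed faster or slower still matches its template. The rejection threshold matters for robustness: without it, every movement would be mapped to some command.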

Processing sequence for task learning by demonstration

Sequence of camera-based gesture recognition

APPLICATIONS & USE CASES

Programming by Demonstration

A comprehensive approach to making the programming of industrial robots easier and more intuitive can be derived from the “Programming by Demonstration” principle. This paradigm comprises learning by imitation with the objective of transferring human capabilities to the robot. This field of engineering often draws on the disciplines of artificial intelligence, image processing, path planning and motor control. In general, a sensor captures the demonstration, and a robot program that imitates certain actions is derived from it, either automatically or by means of a prescribed rule. The demonstration and imitation can take place on different abstraction levels and with different tools. The subject of imitation can therefore be individual poses and movements, learned motion sequences, or entire processes and tasks (task learning from demonstration).
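As one illustration of deriving a program "by means of a prescribed rule", the sketch below reduces a noisy demonstrated hand path to a few waypoints using Ramer-Douglas-Peucker line simplification (here with point-to-segment distance) and prints one motion instruction per waypoint. The choice of this algorithm, the tolerance, and the `MOVE_LINEAR` instruction format are assumptions made for the example. They do not correspond to a specific robot language or to the method used in the application shown here.

```python
import math

def _distance_to_segment(p, a, b):
    """Distance from 2D point p to the line segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def simplify(path, tolerance):
    """Ramer-Douglas-Peucker: keep only points that deviate more than tolerance."""
    if len(path) < 3:
        return list(path)
    index, worst = 0, 0.0
    for i in range(1, len(path) - 1):
        d = _distance_to_segment(path[i], path[0], path[-1])
        if d > worst:
            index, worst = i, d
    if worst <= tolerance:
        return [path[0], path[-1]]
    left = simplify(path[: index + 1], tolerance)
    right = simplify(path[index:], tolerance)
    return left[:-1] + right  # the split point appears in both halves

def to_program(path, tolerance=0.02):
    """Emit one hypothetical MOVE_LINEAR instruction per remaining waypoint."""
    return [f"MOVE_LINEAR x={x:.2f} y={y:.2f}" for x, y in simplify(path, tolerance)]

# Demonstrated L-shaped movement with small sensor noise (units: metres).
demonstration = [(0.0, 0.0), (0.1, 0.005), (0.2, -0.004), (0.3, 0.0),
                 (0.3, 0.1), (0.3, 0.2), (0.31, 0.3)]
for line in to_program(demonstration):
    print(line)
```

This corresponds to imitation at the level of individual movements. Task-level learning would additionally need to interpret what the demonstration is meant to achieve, for example which workpiece is moved where.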

Visualization of the initial scene (top left), the translation process of individual workpieces by demonstration (top center), the execution of the task by the real robot (bottom left and center) and completion of the task (right)

CONCLUSION

Gesture-based Control: Intuition vs. Frustration

Gestures make it possible to build intuitive and efficient control interfaces. That does not mean, however, that gestures make sense for every kind of control function. In particular, gesture-based control becomes frustrating if the gesture recognition lacks robustness or if users have to memorize symbolic gestures like a vocabulary. Designing adequate gestural control systems therefore requires a proper combination of experience and expertise.

We at Gestalt believe that gestures and natural communication will be an important cornerstone of future interaction systems in robotics, giving non-experts the opportunity to control complex machines and processes while boosting efficiency and productivity. Get in touch with us to discuss your use case and how it can benefit from gesture-based user interfaces. You can contact us at info@gestalt-robotics.com or give us a call at +49 30 616 515 60. We would love to hear from you.